A new deep learning algorithm can detect emotions, including depression, from a voice signal.

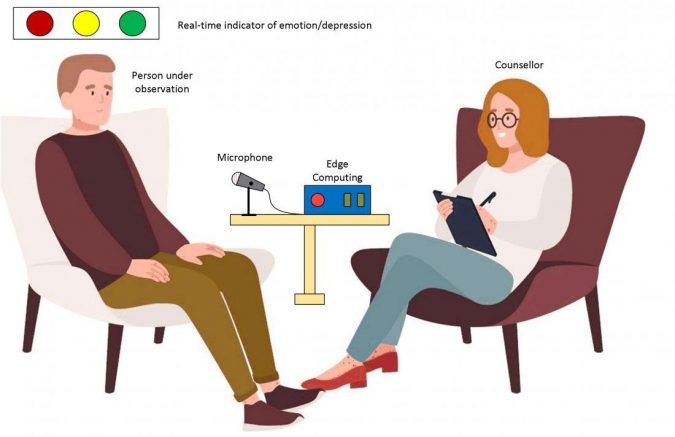

The system, developed by Teddy Surya Gunawan at the International Islamic University Malaysia, could be used by suicide prevention call centres and psychological counsellors.

Distance communication, including by phone and over the Internet, is becoming increasingly common, particularly during the current pandemic. This can make it more difficult for people to assess how the person on the other side of the line is doing.

myEMOS is a new deep learning algorithm that aims to detect emotions, such as feeling down or depressed, from speech. Unlike traditional sentiment analysis, which is often language-dependent and takes words literally, it combines speech analysis with deep learning techniques to make its predictions.

This speech emotion recognition system achieved an accuracy rate of 80% when trained and tested in English, German, French and Italian. It also achieved an average accuracy rate of more than 90% for predicting depression using the sorrow analysis dataset.

The sorrow analysis dataset is a speech depression dataset collected by the research group; it contains 64 depressed speech samples gathered over voice over internet protocol (VoIP). The project has won recognition and awards at several national exhibitions in Malaysia, including gold at the International Conference and Exposition on Inventions by Institutions of Higher Learning (PECIPTA ’19) and silver at the Malaysia Technology Expo 2020.

Edge computing brings computation and data storage closer to the devices where the speech signal is collected, which will improve processing while protecting the user’s privacy. The research group is now looking into deploying its deep learning model on edge devices to maximise data privacy, since the system’s target users are suicide prevention call centres and psychological counsellors.