Study shows how information sources affect voters.

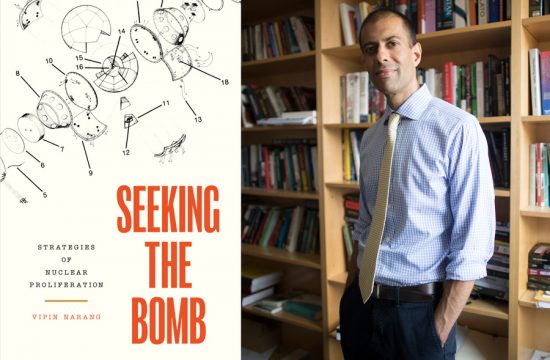

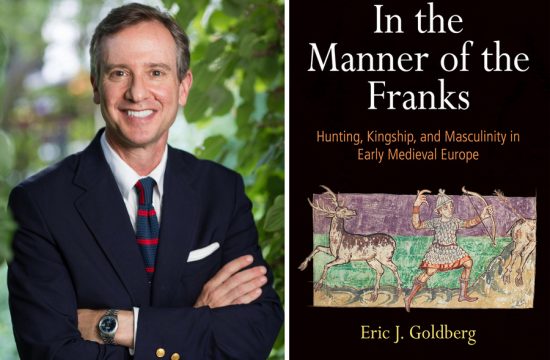

Photo: Stuart Darsch

CAMBRIDGE, Mass. — For all the fact-checking and objective reporting produced by major media outlets, voters in the U.S. nonetheless rely heavily on their pre-existing views when deciding if politicians’ statements are true or not, according to a new study co-authored by MIT scholars.

The study, conducted during the U.S. presidential primaries for the 2016 election, uses a series of statements by President Donald J. Trump — then one of many candidates in the Republican field — to see how partisanship and prior beliefs interact with evaluations of objective fact.

The researchers looked at both true and false statements Trump made, and surveyed voters from both parties about their responses. They found that the source of the claim was significant for members of both parties. For instance, when Trump falsely suggested vaccines cause autism, a claim rejected by scientists, Republicans were more likely to believe the claim when it was attributed to Trump than they were when the claim was presented without attribution.

On the other hand, when Trump correctly stated the financial cost of the Iraq War, Democrats were less likely to believe his claim than they were when the same claim was presented in unattributed form.

“It wasn’t just the case that misinformation attributed to Trump was less likely to be rejected by Republicans,” says Adam Berinsky, a professor of political science at MIT and a co-author of the new paper. “The things Trump said that were true, if attributed to Trump, [made] Democrats less likely to believe [them]. … Trump really does polarize people’s views of reality.”

Overall, self-identified Republicans who were surveyed gave Trump’s false statements a collective “belief score” of about six, on a scale of 0 to 10, when those statements were attributed to him. Without attribution, the belief score fell to about 4.5 out of 10.

Self-identified Democrats, on the other hand, gave Trump’s true statements a belief score of about seven out of 10 when those statements were unattributed. When the statements were attributed to Trump, the aggregate belief score fell to about six out of 10.

The paper, “Processing Political Misinformation: Comprehending the Trump Phenomenon,” is being published today in the journal Royal Society Open Science. The co-authors are Briony Swire, Berinsky, Stephan Lewandowsky of the University of Western Australia and the University of Bristol, and Ullrich K.H. Ecker of the University of Western Australia.

In conducting the study, the researchers surveyed 1,776 U.S. citizens during the fall of 2015, presenting them with four true statements from Trump as well as four false ones.

After correcting the false statements, the scholars also asked respondents whether they were less likely to support Trump as a result, but found that the candidate’s factual errors were largely irrelevant to their voting choices.

“It just doesn’t have an effect on support for him,” Berinsky says. “It’s not that saying things that are incorrect is garnering support for him, but it’s not costing him support either.”

The latest study is one in a series of papers Berinsky has published on political rumors, facticity, and partisan beliefs. His previous work has shown that, for instance, corrections of political rumors tend to be ineffective unless made by people within the same political party as the intended audience. That is, rumors about Democrats that are popular among Republican voters are most effectively shot down by other Republicans, and vice versa.

Along similar lines, Berinsky suggests that in the current, highly polarized political moment, efforts to separate truth from falsehood may need a similar partisan structure, given the blizzard of claims and counterclaims about truth, falsehood, “fake news,” and more.

“In a partisan time, the solution to misinformation has to be partisan, because there just aren’t authorities that will be recognized by both sides of the aisle,” he says.

“This is a tough nut to crack, this question of misinformation and how to correct it,” he adds. “Anybody who tells you there’s an easy solution, like, ‘three easy things you can do to correct misinformation,’ don’t listen to them. If it were that easy, it would be solved by now.”