From the kitchen to the metro, new robotic systems designed to assist the elderly, people with disabilities

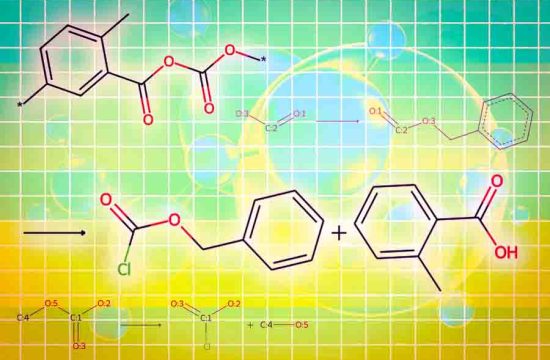

Credit: Xiaoli Zhang, Colorado School of Mines

Many new robots look less like the metal humanoids of pop culture and more like high-tech extensions of ourselves and our capabilities.

In the same way eyeglasses, wheelchairs, pacemakers and other items enable people to see and move more easily in the world, so will many cutting-edge robotic systems. Their aim is to help people be better, stronger and faster. Further, due to recent advances, most are far less expensive than the Six Million Dollar Man.

Greater access to assistive technologies is critical as the median age of the U.S. population rises. Already, there is an enormous need for such tools.

“The number of people with partial impairments is very large and continues to grow,” says Conor Walsh, a roboticist at Harvard University who is developing soft robotics technologies. “For example, these include people who are aging or have suffered a stroke. Overall, about 10 percent of individuals living in the U.S. have difficulty walking. That’s a tremendous problem when you think about it.”

Walsh and other researchers funded by the National Science Foundation (NSF) are working in labs across the country to ensure these technologies not only exist, but are reliable, durable, comfortable and personalized to users.

Their projects are examples of broader, long-term federal investments in robotics-related fundamental engineering and science research intended to improve the safety and well-being of people everywhere.

Blind travelers

Credit: Carnegie Mellon University

Imagine trying to get around the busy, noisy L’Enfant Plaza transit station in Washington, D.C. without the ability to see. L’Enfant Plaza station has two levels for five different Metro lines and a third level for commuter rail service.

Commuting is stressful for anyone. But for people with visual impairments, one of the big challenges in traversing complex buildings and transit stations such as L’Enfant is that there is not enough funding to provide human assistance to everyone who needs it, at all times of day and throughout an entire building or space, says Aaron Steinfeld, an NSF-funded roboticist at Carnegie Mellon University.

“Assistive robots can extend the reach of employees and service providers so visitors can receive help 24/7 anywhere in the building,” he says.

Steinfeld and his colleagues are designing cooperative robots, or co-robots, to empower people with disabilities to safely travel and navigate unfamiliar environments. The team focuses on information exchange, assistive localization, and urban navigation — essentially finding new ways for robots and humans to interact.

Transportation in particular is a major limiting factor in the lives of people with disabilities, affecting their access to work, health care and social events, according to Steinfeld.

“For a person who is blind, navigation needs are slightly different than those who are sighted,” he says. For example, a common way to provide directions to someone who is blind is to trace a map on the person’s hand. In this case, a robot’s otherness is an advantage: The team finds that people feel more comfortable doing this with a robot than a stranger because there is no social awkwardness.

“In our experience, people who are blind are very willing to interact with a robot, to touch its arms and hands.”

In the transit station scenario, robots could provide intelligent, personalized assistance to travelers with disabilities, freeing up Metro personnel for more complicated tasks better suited to humans.

When what you see is what you want

Another important element in robot-human interaction is that of anticipation. Assistive technologies are learning to “read” humans and respond to their needs in more sophisticated ways.

Xiaoli Zhang, an engineer at Colorado School of Mines, is developing a gaze-controlled robotic system that works in three dimensions to enable people with motor impairments to fetch objects by looking at them.

For instance, look at that smartphone. Need to retrieve it? The robot can tell when you do.

If a person intends to pick up a cup or smartphone, the natural thing to do is to look at it first. Zhang studies how people use their eyes to express intentions, then uses that data to fine-tune a system to control robotic movement through eye motion.

“We think gaze is unique because it is a naturally intuitive way for how people interact with the world,” she says. “If you’re thirsty, you look for a bottle of water. You need to look at it first before you manipulate it.”

Similar existing systems rely on the amount of time someone looks at an item. But staring doesn’t always signal a desire to grasp, as when you check the time on your watch. So how does the robot know the difference?

Zhang is researching a pattern-based system that factors in more than gaze time. For example, blink rate and pupil dilation are closely related to people’s intent to manipulate an object.

More nuanced means of communication between humans and robots will be necessary for robots to be widely used in daily life.

Zhang is already looking ahead to the seamless integration of robotic assistants: “Eventually, everyone will be able to afford robots like everyone can afford computers.”

How many spin cycles can a robot survive?

For assistive technologies to fulfill their potential, they have to be the equivalent of machine washable. That is, they need to be convenient.

Walsh, whose NSF-funded projects include a soft robotic exosuit and a soft robotic glove (both wearable technologies to restore or enhance human movement), says affordability, comfort and convenience are important considerations in his research.

“It comes down to: ‘How do we apply as much force as possible in the most comfortable way?’” he says.

Like the other NSF-funded projects, Walsh’s technologies are about improving people’s quality of life in subtle but critical ways. He uses the analogy of a person on a swing.

“Think of someone swinging back and forth. You give them a little tap at the right time and they swing higher,” he says.

The same applies to soft robotic suits: “As someone is walking, we give them a little boost to walk farther, walk longer. If you want to go to the local store to buy something, put on a robotic suit to walk around. If you want to cook dinner, put on a glove that helps you be more dexterous.”

He focuses on minimalist, user-friendly systems that incorporate materials relatively new to robotics: textiles, silicone and hybrid materials. (His lab is home to about seven sewing machines.)

Alexander Leonessa, program director of the NSF General and Age Related Disability Engineering program, says these projects are representative of how interdisciplinary, fundamental engineering research is leading to the development of new technologies, devices and software to improve the quality of life for people with disabilities.

It’s all in support of a new generation of robots, ones that don’t look like conventional robots, tailored to the people who need assistance most.