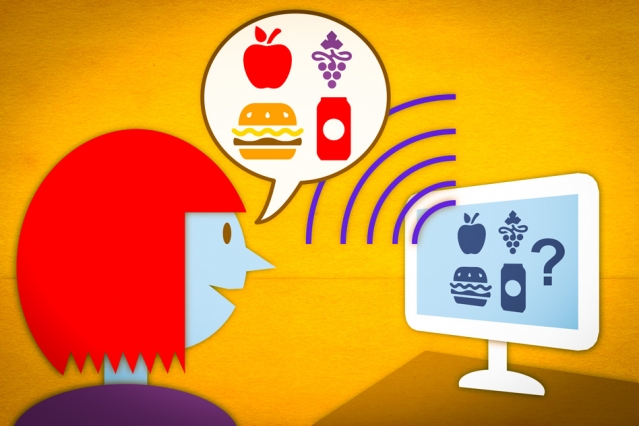

Spoken-language app makes meal logging easier, could aid weight loss.

Illustration: Jose-Luis Olivares/MIT

CAMBRIDGE, Mass. — For people struggling with obesity, logging calorie counts and other nutritional information at every meal is a proven way to lose weight. The technique does require consistency and accuracy, however, and when it fails, it’s usually because people don’t have the time to find and record all the information they need.

A few years ago, a team of nutritionists from Tufts University who had been experimenting with mobile-phone apps for recording caloric intake approached members of the Spoken Language Systems Group at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), with the idea of a spoken-language application that would make meal logging even easier.

This week, at the International Conference on Acoustics, Speech, and Signal Processing in Shanghai, the MIT researchers are presenting a Web-based prototype of their speech-controlled nutrition-logging system.

With it, the user verbally describes the contents of a meal, and the system parses the description and automatically retrieves the pertinent nutritional data from an online database maintained by the U.S. Department of Agriculture (USDA).

The data is displayed together with images of the corresponding foods and pull-down menus that allow the user to refine their descriptions — selecting, for instance, precise quantities of food. But those refinements can also be made verbally. A user who begins by saying, “For breakfast, I had a bowl of oatmeal, bananas, and a glass of orange juice” can then make the amendment, “I had half a banana,” and the system will update the data it displays about bananas while leaving the rest unchanged.
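The amendment behavior described above can be pictured as a log keyed by food name. In that picture, a correction replaces a single entry and leaves the others alone. The sketch below is purely illustrative. It assumes a hypothetical `LoggedFood` record and a hypothetical `apply_amendment` helper, and it uses placeholder starting quantities. The article does not describe how the MIT system stores or updates its data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoggedFood:
    """Hypothetical record for one logged food item."""
    name: str
    quantity: float
    unit: str


def apply_amendment(meal, name, quantity, unit):
    """Return a new meal log in which only `name` is replaced.

    Every other entry is carried over unchanged (the very same objects).
    """
    updated = dict(meal)  # shallow copy; the original log is not modified
    updated[name] = LoggedFood(name, quantity, unit)
    return updated


# "For breakfast, I had a bowl of oatmeal, bananas, and a glass of orange juice"
# (the starting quantities and units are placeholder assumptions)
breakfast = {
    "oatmeal": LoggedFood("oatmeal", 1, "bowl"),
    "banana": LoggedFood("banana", 1, "whole"),
    "orange juice": LoggedFood("orange juice", 1, "glass"),
}

# "I had half a banana": amend only the banana entry
amended = apply_amendment(breakfast, "banana", 0.5, "whole")
print(amended["banana"])
print(amended["oatmeal"] is breakfast["oatmeal"])  # True: untouched entries are shared
```

Returning a new dictionary rather than mutating the old one makes it easy to keep the previous version of the log around, for example to undo a misheard correction.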

“What [the Tufts nutritionists] have experienced is that the apps that were out there to help people try to log meals tended to be a little tedious, and therefore people didn’t keep up with them,” says James Glass, a senior research scientist at CSAIL, who leads the Spoken Language Systems Group. “So they were looking for ways that were accurate and easy to input information.”

The first author on the new paper is Mandy Korpusik, an MIT graduate student in electrical engineering and computer science. She's joined by Glass, her thesis advisor; by her fellow graduate student Michael Price; and by Calvin Huang, an undergraduate researcher in Glass's group.

Context sensitivity

In the paper, the researchers report the results of experiments with a speech-recognition system that they developed specifically to handle food-related terminology. But that wasn’t the main focus of their work; indeed, an online demo of their meal-logging system instead uses Google’s free speech-recognition app.

Their research concentrated on two other problems. One is identifying words’ functional role: The system needs to recognize that if the user records the phrase “bowl of oatmeal,” nutritional information on oatmeal is pertinent, but if the phrase is “oatmeal cookie,” it’s not.

The other problem is reconciling the user’s phrasing with the entries in the USDA database. For instance, the USDA data on oatmeal is recorded under the heading “oats”; the word “oatmeal” shows up nowhere in the entry.

To address the first problem, the researchers used machine learning. Through the Amazon Mechanical Turk crowdsourcing platform, they recruited workers who simply described what they'd eaten at recent meals, then labeled the pertinent words in the description as names of foods, quantities, brand names, or modifiers of the food names. In "bowl of oatmeal," "bowl" is a quantity and "oatmeal" is a food, but in "oatmeal cookie," "oatmeal" is a modifier and "cookie" is the food.
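One way to represent a labeled description is as a list of (word, label) pairs that use the four categories named above. The sketch below shows why the labels matter: a modifier is folded into the food it describes, so in "oatmeal cookie" only "cookie" is treated as the food to look up. Both the `group_items` helper and the extra `other` label for words such as "of" are hypothetical. They are our assumptions, not the researchers' data format.

```python
# Labels from the article, plus "other" (an assumption) for unlabeled words.
ATTACHED_LABELS = ("quantity", "brand", "modifier")

bowl_of_oatmeal = [("bowl", "quantity"), ("of", "other"), ("oatmeal", "food")]
oatmeal_cookie = [("oatmeal", "modifier"), ("cookie", "food")]


def group_items(tokens):
    """Attach quantity, brand, and modifier words to the next food word.

    Simplifying assumption: such words always precede the food they describe.
    """
    items = []
    pending = {key: [] for key in ATTACHED_LABELS}
    for word, label in tokens:
        if label in pending:
            pending[label].append(word)
        elif label == "food":
            items.append({"food": word, **pending})
            pending = {key: [] for key in ATTACHED_LABELS}
        # words labeled "other" are skipped
    return items


print(group_items(bowl_of_oatmeal))
print(group_items(oatmeal_cookie))
```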

Once they had roughly 10,000 labeled meal descriptions, the researchers used machine-learning algorithms to find patterns in the syntactic relationships between words that would identify their functional roles.
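The article does not say which learning algorithm the team used. The sketch below is a deliberately tiny stand-in, not the researchers' model. From labeled examples, it learns the most frequent label for each word given the word that follows it. When that context is unseen, it backs off to the word alone, and then to a hypothetical `other` label. The two training phrases are illustrative, but the sketch does show how context (here, just the next word) lets the same word, "oatmeal," receive different roles.

```python
from collections import Counter, defaultdict


def context_key(words, i):
    """Feature used here (an assumption): the word plus the word after it."""
    next_word = words[i + 1] if i + 1 < len(words) else "</s>"
    return (words[i], next_word)


def train(examples):
    """Count how often each label occurs per context and per bare word."""
    by_context = defaultdict(Counter)
    by_word = defaultdict(Counter)
    for tokens in examples:
        words = [word for word, _ in tokens]
        for i, (word, label) in enumerate(tokens):
            by_context[context_key(words, i)][label] += 1
            by_word[word][label] += 1
    return by_context, by_word


def tag(words, model):
    """Pick the most frequent label, backing off from context to word to "other"."""
    by_context, by_word = model
    labels = []
    for i, word in enumerate(words):
        counts = by_context.get(context_key(words, i)) or by_word.get(word)
        labels.append(counts.most_common(1)[0][0] if counts else "other")
    return labels


training_data = [
    [("a", "other"), ("bowl", "quantity"), ("of", "other"), ("oatmeal", "food")],
    [("an", "other"), ("oatmeal", "modifier"), ("cookie", "food")],
]
model = train(training_data)
print(tag(["half", "an", "oatmeal", "cookie"], model))
print(tag(["a", "bowl", "of", "oatmeal"], model))
```

A real tagger trained on thousands of descriptions would use richer features and a statistical sequence model. This count table only illustrates the idea of learning roles from context.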

Semantic matching

To translate between users’ descriptions and the labels in the USDA database, the researchers used an open-source database called Freebase, which has entries on more than 8,000 common food items, many of which include synonyms. Where synonyms were lacking, they again recruited Mechanical Turk workers to supply them.
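At its simplest, reconciling a user's phrasing with the database headings amounts to a synonym lookup. The sketch below is a minimal illustration under stated assumptions. Only the mapping from "oatmeal" to "oats" comes from the article. The other headings and synonyms are made-up placeholders, not real USDA or Freebase entries, and `match_heading` is a hypothetical helper. In a system like the one described, the synonym table would presumably merge synonyms drawn from Freebase with those supplied by Mechanical Turk workers.

```python
# Placeholder headings; only "oats" is taken from the article.
USDA_HEADINGS = {"oats", "bananas", "orange juice"}

# Synonym -> heading. Only "oatmeal" -> "oats" is from the article;
# the rest stand in for Freebase or crowdsourced synonyms.
SYNONYMS = {"oatmeal": "oats", "banana": "bananas", "oj": "orange juice"}


def match_heading(phrase, headings=USDA_HEADINGS, synonyms=SYNONYMS):
    """Return the database heading for a user's phrase, or None if unknown."""
    key = phrase.strip().lower()
    if key in headings:  # the user already used the database's wording
        return key
    heading = synonyms.get(key)
    return heading if heading in headings else None


print(match_heading("Oatmeal"))
print(match_heading("pizza"))
```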

The version of the system presented at the conference is intended chiefly to demonstrate the viability of its approach to natural-language processing; it reports calorie counts but doesn’t yet total them automatically. A version that does is in the works, however, and when it’s complete, the Tufts researchers plan to conduct a user study to determine whether it indeed makes nutrition logging easier.